Cholecheck: Explainable AI-Based Multi-Class Classification of Gallbladder Diseases Using Ultrasound Images

Gallbladder diseases, ranging from gallstones and cholecystitis to carcinoma, affect a significant portion of the global population. Accurate diagnosis is critical but is often delayed by overlapping symptoms and by the difficulty of interpreting ultrasound images. This study proposes a multi-class classification system that uses deep learning to identify nine distinct gallbladder disease types from ultrasound images. The methodology applies preprocessing techniques such as contrast-limited adaptive histogram equalization (CLAHE) and active contour segmentation to enhance image quality and isolate regions of interest. A hybrid ensemble model combining VGG16, ResNet152, and a custom CNN achieved superior performance, with 99.8% accuracy and a Cohen's kappa of 0.998. Transfer learning, feature fusion, and ensemble strategies were employed to improve robustness and generalization. Explainable AI (XAI) techniques such as Grad-CAM and LIME were incorporated to provide interpretable visualizations of the model's predictions and support clinical decision-making. The system was trained on the UIDataGB dataset, which contains 10,692 annotated ultrasound images covering diverse gallbladder conditions. Comparative benchmarking shows that the proposed model outperforms existing systems both in accuracy and in the number of disease classes it distinguishes. This research contributes a scalable, interpretable, AI-driven diagnostic tool that supports early detection and management of gallbladder diseases while addressing the variability inherent in medical imaging.
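
The abstract does not state how the outputs of VGG16, ResNet152, and the custom CNN are combined, or how the kappa score is computed. The sketch below assumes soft voting (averaging each network's per-class probabilities) as the fusion rule; this is one common ensemble strategy, not necessarily the authors' method. `soft_vote` and `cohen_kappa` are hypothetical helper names, and the probabilities are illustrative values rather than outputs of the trained models. Cohen's kappa is computed as `kappa = (p_o - p_e) / (1 - p_e)`, where `p_o` is the observed agreement and `p_e` is the agreement expected by chance, so it is a coefficient in [-1, 1] rather than a percentage.

```python
from __future__ import annotations

from collections import Counter
from typing import Optional, Sequence


def soft_vote(
    prob_lists: Sequence[Sequence[Sequence[float]]],
    weights: Optional[Sequence[float]] = None,
) -> list[int]:
    """Fuse several models by averaging their per-class probabilities.

    prob_lists[m][i][c] is model m's probability for sample i and class c.
    (Soft voting is an assumed fusion rule, used here for illustration.)
    """
    n_models = len(prob_lists)
    if weights is None:
        weights = [1.0 / n_models] * n_models  # equal vote for every model
    n_samples = len(prob_lists[0])
    n_classes = len(prob_lists[0][0])
    predictions = []
    for i in range(n_samples):
        # Weighted sum of each class probability across all models
        fused = [
            sum(w * probs[i][c] for w, probs in zip(weights, prob_lists))
            for c in range(n_classes)
        ]
        predictions.append(max(range(n_classes), key=fused.__getitem__))
    return predictions


def cohen_kappa(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Agreement between true labels and predictions, corrected for chance."""
    n = len(y_true)
    # Observed agreement p_o: fraction of matching labels
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    # Expected chance agreement p_e from the two marginal label distributions
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    labels = set(true_counts) | set(pred_counts)
    p_e = sum(true_counts[k] * pred_counts[k] for k in labels) / (n * n)
    if p_e == 1.0:
        return 1.0  # both sides use one identical label everywhere
    return (p_o - p_e) / (1.0 - p_e)


# Illustrative probabilities for 3 models, 4 images, 3 classes (made-up values)
vgg16 = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4], [0.5, 0.4, 0.1]]
resnet152 = [[0.6, 0.3, 0.1], [0.2, 0.6, 0.2], [0.2, 0.2, 0.6], [0.3, 0.6, 0.1]]
custom_cnn = [[0.8, 0.1, 0.1], [0.3, 0.5, 0.2], [0.1, 0.4, 0.5], [0.4, 0.5, 0.1]]

preds = soft_vote([vgg16, resnet152, custom_cnn])  # → [0, 1, 2, 1]
kappa = cohen_kappa([0, 1, 2, 0], preds)  # 7/11, about 0.636
```

Passing explicit `weights` lets a stronger backbone dominate the vote; feature-level fusion, which the abstract also mentions, would instead merge intermediate embeddings before a shared classifier head.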

Neuro-Find: AI-Enhanced Early Detection of Cognitive Impairment Using MRI

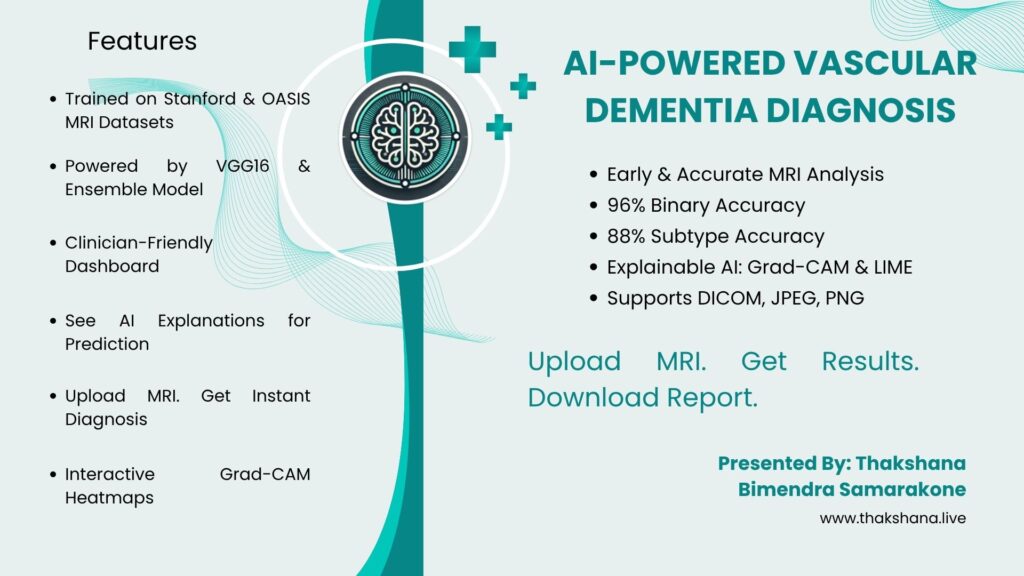

Vascular dementia (VaD) presents significant diagnostic challenges due to its heterogeneous clinical manifestations and the overlap of symptoms with other neurological disorders. Traditional diagnostic approaches rely heavily on expert interpretation of MRI scans, a process that is often time-consuming, subjective, and susceptible to inter-observer variability. To address these limitations, an AI-powered web application has been developed to facilitate rapid, accurate, and interpretable diagnosis of vascular dementia using brain MRI data.

This system employs advanced deep learning architectures, including VGG16 and DenseNet121, to perform both binary classification (distinguishing VaD-demented from non-demented cases) and multi-class subclassification into four VaD subtypes: Binswanger, Hemorrhagic, Strategic, and Subcortical dementia. The prototype demonstrated a testing accuracy of 96% for binary classification and 88.25% for subtype analysis. A distinctive feature of the system is the integration of explainable AI techniques, such as Grad-CAM and LIME, which provide visual and textual explanations to support clinical decision-making and foster trust in AI-driven outcomes.
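
Grad-CAM, used in both the gallbladder and the dementia systems, weights each feature map A^k of a chosen convolutional layer by the spatial average of the class score's gradient with respect to that map, sums the weighted maps, and applies a ReLU. The sketch below shows only that combination step in plain Python. In practice the activations and gradients would be captured from the trained VGG16 or DenseNet121 model with framework hooks, which are not shown. The function name `grad_cam` and the example tensors are hypothetical.

```python
from __future__ import annotations


def grad_cam(
    activations: list[list[list[float]]],
    gradients: list[list[list[float]]],
) -> list[list[float]]:
    """Build a Grad-CAM heatmap from one convolutional layer.

    activations[k][i][j] is feature map A^k at pixel (i, j); gradients has the
    same (K, H, W) shape and holds d(class score)/dA^k.
    """
    num_maps = len(activations)
    height, width = len(activations[0]), len(activations[0][0])
    # alpha_k: gradient of each feature map averaged over all spatial positions
    alphas = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(num_maps)
    ]
    # Weighted sum of the feature maps, then ReLU to keep only positive evidence
    cam = [
        [max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(num_maps)))
         for j in range(width)]
        for i in range(height)
    ]
    # Normalise to [0, 1] so the map can be upsampled and overlaid on the scan
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Two 2x2 feature maps with hand-picked gradients (illustrative values only)
acts = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 2.0], [2.0, 0.0]]]
grads = [[[0.5, 0.5], [0.5, 0.5]], [[-0.25, -0.25], [-0.25, -0.25]]]
heatmap = grad_cam(acts, grads)  # → [[1.0, 0.0], [0.0, 1.0]]
```

The second feature map has a negative average gradient, so it suppresses rather than supports the class; the ReLU removes the resulting negative regions from the heatmap.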

The web-based interface supports DICOM, JPEG, and PNG formats, enabling clinicians to efficiently upload MRI scans, receive diagnostic predictions, and download comprehensive reports. By combining high diagnostic performance with transparency and user accessibility, this prototype aims to bridge the gap between artificial intelligence research and clinical practice, offering healthcare professionals a valuable tool for timely and reliable vascular dementia diagnosis and management.
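
The abstract lists the accepted upload formats but not how the interface checks them. A minimal sketch, assuming the server validates uploads by their signature bytes rather than by file extension: PNG files start with a fixed 8-byte signature, JPEG files with the bytes `FF D8 FF`, and DICOM Part 10 files carry a 128-byte preamble followed by the ASCII characters `DICM`. The function and constant names are hypothetical.

```python
from __future__ import annotations

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # fixed 8-byte PNG file signature
JPEG_SIGNATURE = b"\xff\xd8\xff"  # SOI marker (FF D8) plus the next marker's FF
DICOM_MAGIC_OFFSET = 128  # DICOM Part 10: 128-byte preamble, then b"DICM"


def detect_scan_format(header: bytes) -> str | None:
    """Classify an upload as 'dicom', 'png' or 'jpeg' from its first bytes."""
    if header[DICOM_MAGIC_OFFSET:DICOM_MAGIC_OFFSET + 4] == b"DICM":
        return "dicom"
    if header.startswith(PNG_SIGNATURE):
        return "png"
    if header.startswith(JPEG_SIGNATURE):
        return "jpeg"
    return None  # unsupported or mislabelled file: reject before inference


def detect_file_format(path: str) -> str | None:
    """Read just enough of a file to cover the DICOM preamble and magic word."""
    with open(path, "rb") as handle:
        return detect_scan_format(handle.read(DICOM_MAGIC_OFFSET + 4))
```

Older DICOM files written without the Part 10 preamble would not pass this check; a dedicated reader such as pydicom can handle those files and also extracts the pixel data needed for inference.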

An Explainable Few-shot Early Defect Detection System for Production Lines

This research presents an explainable system for detecting defects in production lines using only a few sample images. It combines advanced machine learning techniques with visual explanations to quickly identify defects, helping manufacturers improve quality control with minimal training data and greater transparency in decision-making.
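
The abstract does not name its few-shot method. As one illustrative possibility, the sketch below uses a prototypical-network-style rule, a swapped-in technique rather than the authors' confirmed approach: each class (for example, defect-free versus a particular defect type) is represented by the mean embedding of its few labelled images, and a new image is assigned to the nearest prototype. The embeddings are assumed to come from a pretrained feature extractor that is not shown; the class names, vectors, and function names are hypothetical.

```python
from __future__ import annotations

import math


def class_prototypes(support: dict[str, list[list[float]]]) -> dict[str, list[float]]:
    """Average the few labelled embeddings of each class into one prototype."""
    prototypes = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        prototypes[label] = [
            sum(v[d] for v in vectors) / len(vectors) for d in range(dim)
        ]
    return prototypes


def classify(
    query: list[float], prototypes: dict[str, list[float]]
) -> tuple[str, dict[str, float]]:
    """Assign the nearest prototype; the distances double as a simple explanation."""
    distances = {
        label: math.dist(query, proto) for label, proto in prototypes.items()
    }
    best = min(distances, key=distances.__getitem__)
    return best, distances


# Two labelled examples per class, as 2-D embeddings (illustrative values)
support_set = {
    "normal": [[0.0, 0.0], [0.2, 0.0]],
    "scratch": [[1.0, 1.0], [1.2, 0.8]],
}
prototypes = class_prototypes(support_set)
label, distances = classify([0.9, 1.0], prototypes)  # label == "scratch"
```

Because each decision reduces to distances against a handful of prototypes, the per-class distances give an inspectable rationale that can accompany visual explanations such as saliency maps.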

AMGAN: Attention-Driven Multi-Scale GAN with Complementary Learning Sub-Network for Generalized Non-Uniform Low-Light Image Enhancement

Low-light image enhancement (LLIE) is a critical component of computer vision pipelines, particularly in domains such as surveillance, autonomous driving, medical imaging, and photography. Images captured under poor illumination often suffer from low brightness, noise, and color distortions, which degrade the performance of downstream vision tasks. This project proposes AMGAN, an Attention-Driven Multi-Scale GAN integrated with a Complementary Learning Sub-Network (CLSN), to address the complex challenges of LLIE, particularly under non-uniform lighting conditions.

The CLSN generates an inverse grey map that acts as an adaptive attention map, selectively enhancing underexposed regions while preserving well-lit areas. This attention map is concatenated with the original low-light image to form an intermediate enhancement. The GAN's generator, based on a U-Net architecture, extracts both spatial-domain and frequency-domain features using the Fast Fourier Transform (FFT), enabling fine-grained texture preservation and natural color consistency. A dual Markov discriminator encourages realism at both the global and the local image level.
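
In AMGAN the inverse grey map is produced by the learned CLSN. To make the idea concrete, the sketch below substitutes a fixed, non-learned stand-in: it computes each pixel's luminance with the ITU-R BT.601 weights (0.299, 0.587, 0.114), inverts it so that dark regions receive high weight, and appends the result as a fourth channel, mirroring the concatenation step described above. This is an assumption for illustration, not the CLSN itself; the function names are hypothetical.

```python
from __future__ import annotations


def inverse_grey_map(image: list[list[tuple[float, float, float]]]) -> list[list[float]]:
    """Per-pixel attention = 1 - luminance, for RGB values in [0, 1].

    Fixed stand-in for the learned CLSN output: dark pixels get weights
    near 1 and well-lit pixels weights near 0.
    """
    return [
        [1.0 - (0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


def concat_attention(
    image: list[list[tuple[float, ...]]], attention: list[list[float]]
) -> list[list[tuple[float, ...]]]:
    """Stack the attention value onto each pixel as a fourth channel (r, g, b, a)."""
    return [
        [(*pixel, weight) for pixel, weight in zip(image_row, attention_row)]
        for image_row, attention_row in zip(image, attention)
    ]


# A 1x3 strip: black, mid-grey, white (illustrative values)
strip = [[(0.0, 0.0, 0.0), (0.5, 0.5, 0.5), (1.0, 1.0, 1.0)]]
attention = inverse_grey_map(strip)
generator_input = concat_attention(strip, attention)
```

A learned map can go beyond this fixed formula, for example by down-weighting noise in very dark regions, which is one reason a trainable sub-network is used rather than plain luminance inversion.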

The model is trained and evaluated on benchmark datasets such as LSRW, LOLv1, LOLv2-real, and LOLv2-synthetic, achieving competitive performance in terms of PSNR, SSIM, and perceptual quality. Experimental results show that AMGAN balances enhancement quality with computational efficiency, making it a viable solution for real-world LLIE applications. Future work will explore architectural optimizations and potential domain-specific extensions.
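
PSNR, one of the reported metrics, compares an enhanced image with its normal-light reference through the mean squared error: `PSNR = 10 * log10(MAX^2 / MSE)`, where MAX is the largest possible pixel value (1.0 for normalized images, 255 for 8-bit images). A minimal plain-Python sketch follows; the function name is hypothetical and the pixel values are illustrative. SSIM, which compares local means, variances, and covariances, is more involved and is usually taken from an existing implementation such as scikit-image's `structural_similarity`.

```python
from __future__ import annotations

import math


def psnr(
    reference: list[list[float]], enhanced: list[list[float]], max_value: float = 1.0
) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized greyscale images."""
    ref = [v for row in reference for v in row]
    out = [v for row in enhanced for v in row]
    if len(ref) != len(out):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(ref, out)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


ground_truth = [[0.0, 1.0], [0.5, 0.5]]
output = [[0.1, 0.9], [0.5, 0.5]]
score = psnr(ground_truth, output)  # MSE = 0.005, so about 23.01 dB
```

Higher PSNR means the enhanced image is closer to the reference pixel by pixel; because it ignores structure and perception, it is typically reported alongside SSIM and perceptual measures, as in the evaluation above.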